1. SQL Server 2008 R2 Parallel Computing Edition

One huge change is the Parallel Computing

edition (formerly code-named Madison), essentially the SQL Server

equivalent to Windows Server High Performance Computing (HPC) Edition.

It will utilize a Massively Parallel Processing (MPP) scale-out

architecture to support data warehousing ranging from 10TB to 1+ PB. The

Parallel Computing Edition is priced at $57,489 per processor.

2. SQL Server 2008 R2 Datacenter Edition

The biggest change is undoubtedly the new

SQL Server 2008 R2 Datacenter Edition. This edition parallels Windows

Server Datacenter Edition, and with support from up to 256 logical

processors, it's designed to address the highest levels of scalability.

The Datacenter edition has no memory limitation and offers support for

more than 25 instances. It also will be priced at $57,489 per processor.

3. SQL Server 2008 R2 Enterprise Edition and Developer Edition

The Enterprise edition is the one that’s

targeted toward most businesses. With the advent of the new Datacenter

edition, the Enterprise edition will be limited to support for 64

logical processors and 25 instances, which really isn’t much of a

change. It will be priced at $28,749 per processor or $13,969 per server

with 25 CALs. The Developer edition shares the same feature set and is

licensed at $50 per developer.

4. SQL Server 2008 R2 Standard Edition

Designed for small and medium businesses,

the Standard edition supports up to 4 CPUs and 2TB of RAM, with

unlimited database size. It includes support for all BI subsystems

including Analysis Services, Integration Services, and Reporting

Services, but lacks many of the enterprise-oriented features from the

Enterprise edition. It will be priced at $7,499 per processor or $1,849

per server with five CALs.

Related: SQL Server 2008 R2 Appliances

5. SQL Server 2008 R2 Web Edition

This edition is designed to be run by web

hosting providers. It provides support for up to 4 CPUs, 2TB of RAM,

and unlimited database size. In addition to its relational database

engine, SQL Server 2008 R2 Web Edition supports Reporting Services. It's

priced at $15 per processor per month.

6. SQL Server 2008 R2 Workgroup Edition

Workgroup edition is designed to provide

basic relational database services for departments and branch offices.

It supports a maximum of 2 CPUs, 4GB of RAM, and unlimited database

size. Its only BI feature is Reporting Services. It's priced at $3,899

or $739 per server including five CALs.

7. SQL Server 2008 R2 Express Edition

Targeted toward developers and ISVs, SQL

Server Express Edition comes in three versions. SQL Server 2008 R2

Express provides relational database services; SQL Server 2008 R2

Express with Tools Edition adds SSMS; and SQL Server 2008 R2 Express

with Advanced Services adds SSMS and a local instance of Reporting

Services. All of the SQL Server 2008 R2 Express editions will continue

to be free downloads.

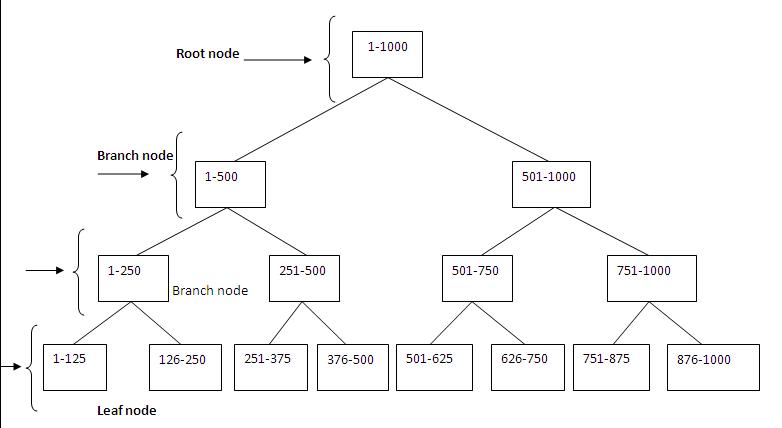

Leaf node

Consider the above B-Tree structure and we are searching a field with

value of 978. First the database engine would check the root node value

of 1 to 1000. Since the value is 978 it uses the branch node 501 to 1000

and navigates further to reach 751 to 1000. Finally it would navigate

further through the leaf node 876 to 1000 and find the desired record

978.

Thus by using the index there were only fewer number of reads. When

compared to scanning all the 1000 records to fetch the result, this

usage of index has reduced the IO to a large extent.

Clustered Index:

A clustered index stores the table data at the leaf page of the index

based on clustering key. Because the clustered index stores the data in

sorted order there is no need to rearrange the page in the index. There

can be only one clustered index in a table since the data is sorted.

Consider the analogy of a dictionary where the words are sorted

alphabetically and definitions appear next to the word. Similarly in a

clustered index CI, the leaf page contains the entire data/records and

is sorted.

NonClustered Index:

A nonclustered index is analogous to an index in the back of a book. We

can use the books index to locate pages that match an index entry. There

can be 249 nonclustered index NCI and 1 clustered index in any table.

If table does not have a clustered index, then its unsorted and is

called a HEAP. A NC created on a Heap contains pointers to table rows.

Each entry in the index page contains a row ID (RID). The RID is a

pointer to a table row in a heap. If the table has clustered index, the

index pages of a NCI contain the CI keys rather than RIDs. An index

pointer whether it is a RID or a CI key is called a lookup.

Scan and Seek:

The following are the operators related to indexes. They are available in the SQL Server query execution plans.

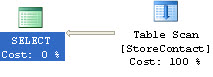

Table scan – A table scan results in reading the entire datas

in a table and returns the entire table or specific records. This is bad

for performance, if the table has numerous records doing a table scan

will affect the performance severely. In some cases if there are fewer

records its fine to have table scan.

So if you see that SQL Server has performed a Table Scan, take a note of

how many rows are in the table. If there arent many, then in this case,

a Table Scan is a good thing.

In the below query in adventureworks database we dont have an index on

customerid field and it results in table scan. Also when a Select * is

done in a table it will fetch the entire results and hence will do a

table scan.

Leaf node

Consider the above B-Tree structure and we are searching a field with

value of 978. First the database engine would check the root node value

of 1 to 1000. Since the value is 978 it uses the branch node 501 to 1000

and navigates further to reach 751 to 1000. Finally it would navigate

further through the leaf node 876 to 1000 and find the desired record

978.

Thus by using the index there were only fewer number of reads. When

compared to scanning all the 1000 records to fetch the result, this

usage of index has reduced the IO to a large extent.

Clustered Index:

A clustered index stores the table data at the leaf page of the index

based on clustering key. Because the clustered index stores the data in

sorted order there is no need to rearrange the page in the index. There

can be only one clustered index in a table since the data is sorted.

Consider the analogy of a dictionary where the words are sorted

alphabetically and definitions appear next to the word. Similarly in a

clustered index CI, the leaf page contains the entire data/records and

is sorted.

NonClustered Index:

A nonclustered index is analogous to an index in the back of a book. We

can use the books index to locate pages that match an index entry. There

can be 249 nonclustered index NCI and 1 clustered index in any table.

If table does not have a clustered index, then its unsorted and is

called a HEAP. A NC created on a Heap contains pointers to table rows.

Each entry in the index page contains a row ID (RID). The RID is a

pointer to a table row in a heap. If the table has clustered index, the

index pages of a NCI contain the CI keys rather than RIDs. An index

pointer whether it is a RID or a CI key is called a lookup.

Scan and Seek:

The following are the operators related to indexes. They are available in the SQL Server query execution plans.

Table scan – A table scan results in reading the entire datas

in a table and returns the entire table or specific records. This is bad

for performance, if the table has numerous records doing a table scan

will affect the performance severely. In some cases if there are fewer

records its fine to have table scan.

So if you see that SQL Server has performed a Table Scan, take a note of

how many rows are in the table. If there arent many, then in this case,

a Table Scan is a good thing.

In the below query in adventureworks database we dont have an index on

customerid field and it results in table scan. Also when a Select * is

done in a table it will fetch the entire results and hence will do a

table scan.

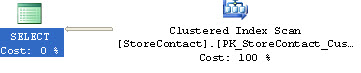

Clustered Index scan This is nothing but tables scan in a table

which has Clustered index. Since the clustered index leaf page contains

the data itself, performing a clustered index scan will scan all the

entire records which will affect performance. But as I mentioned earlier

if the records are fewer it wouldnt affect much.

For the below query the optimizer does an Clustered index scan to

retrieve the records,

Clustered Index scan This is nothing but tables scan in a table

which has Clustered index. Since the clustered index leaf page contains

the data itself, performing a clustered index scan will scan all the

entire records which will affect performance. But as I mentioned earlier

if the records are fewer it wouldnt affect much.

For the below query the optimizer does an Clustered index scan to

retrieve the records,

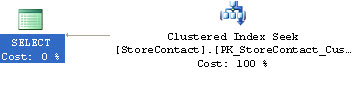

Clustered index seek A seek will retrieve only selective

records from a table when compared to scan which will traverse all the

records in a table. If the seek operation is done using a clustered

index its a clustered index seek. Basically, any seek operation will

vertically traverse through the B-Tree and fetch the records.

Consider the below query for which I created a clustered index on

Customerid field. The optimizer uses the clustered index and performs a

clustered index seek.

Clustered index seek A seek will retrieve only selective

records from a table when compared to scan which will traverse all the

records in a table. If the seek operation is done using a clustered

index its a clustered index seek. Basically, any seek operation will

vertically traverse through the B-Tree and fetch the records.

Consider the below query for which I created a clustered index on

Customerid field. The optimizer uses the clustered index and performs a

clustered index seek.

The clustered index seek will traverse through the records where the

customerid=322 and fetch the output. When compared to table scan which

will traverse through all the records, an index seek is very helpful in

reading the number of records quickly and is good for performance.

Index scan Since a scan touches every row in the table whether

or not it qualifies, the cost is proportional to the total number of

rows in the table. An index scan is nothing but a scan on the

nonclustered index. When index scan happens, all the rows in the leaf

level are scanned.

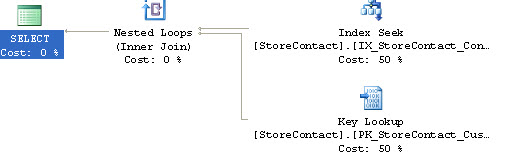

Index seek An index seek uses the nonclustered index to seek

the records in a table and is considered better for performance if there

is high selectivity.

For the below query the optimizer does an index seek using the NC index

on contacted field. Since the NC covers only the contactid it will not

be able to fetch all the records with an index seek alone. So its uses

seek to fetch the records which have contactid=322 and then does a key

lookup using the clustered index key to fetch the other fields records.

The clustered index seek will traverse through the records where the

customerid=322 and fetch the output. When compared to table scan which

will traverse through all the records, an index seek is very helpful in

reading the number of records quickly and is good for performance.

Index scan Since a scan touches every row in the table whether

or not it qualifies, the cost is proportional to the total number of

rows in the table. An index scan is nothing but a scan on the

nonclustered index. When index scan happens, all the rows in the leaf

level are scanned.

Index seek An index seek uses the nonclustered index to seek

the records in a table and is considered better for performance if there

is high selectivity.

For the below query the optimizer does an index seek using the NC index

on contacted field. Since the NC covers only the contactid it will not

be able to fetch all the records with an index seek alone. So its uses

seek to fetch the records which have contactid=322 and then does a key

lookup using the clustered index key to fetch the other fields records.

The key lookup is an expensive operation if there are numerous records.

Since key lookup increases as the IO we might have to avoid it in some

cases. Index with included columns can help to overcome this situation

and cover the entire query and in turn

The key lookup is an expensive operation if there are numerous records.

Since key lookup increases as the IO we might have to avoid it in some

cases. Index with included columns can help to overcome this situation

and cover the entire query and in turn